Metascience (also known as meta-research) is the use of scientific methodology to study science itself. Metascience seeks to increase the quality of scientific research while reducing inefficiency. It is also known as "research on research" and "the science of science", as it uses research methods to study how research is done and find where improvements can be made. Metascience concerns itself with all fields of research and has been described as "a bird's eye view of science".[1] In the words of John Ioannidis, "Science is the best thing that has happened to human beings ... but we can do it better."[2]

In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid." Meta-research in the following decades found many methodological flaws, inefficiencies, and poor practices in research across numerous scientific fields. Many scientific studies could not be reproduced, particularly in medicine and the soft sciences. The term "replication crisis" was coined in the early 2010s as part of a growing awareness of the problem.[3]

Measures have been implemented to address the issues revealed by metascience. These measures include the pre-registration of scientific studies and clinical trials as well as the founding of organizations such as CONSORT and the EQUATOR Network that issue guidelines for methodology and reporting. There are continuing efforts to reduce the misuse of statistics, to eliminate perverse incentives from academia, to improve the peer review process, to systematically collect data about the scholarly publication system,[4] to combat bias in scientific literature, and to increase the overall quality and efficiency of the scientific process. As such, metascience is a major part of the methods underlying the Open Science Movement.

History

In 1966, an early meta-research paper examined the statistical methods of 295 papers published in ten high-profile medical journals. It found that, "in almost 73% of the reports read ... conclusions were drawn when the justification for these conclusions was invalid."[6] A paper in 1976 called for funding for meta-research: "Because the very nature of research on research, particularly if it is prospective, requires long periods of time, we recommend that independent, highly competent groups be established with ample, long term support to conduct and support retrospective and prospective research on the nature of scientific discovery".[7] In 2005, John Ioannidis published a paper titled "Why Most Published Research Findings Are False", which argued that a majority of papers in the medical field produce conclusions that are wrong.[5] The paper went on to become the most downloaded paper in the Public Library of Science[8][9] and is considered foundational to the field of metascience.[10] In a related study with Jeremy Howick and Despina Koletsi, Ioannidis showed that only a minority of medical interventions are supported by 'high quality' evidence according to The Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach.[11] Later meta-research identified widespread difficulty in replicating results in many scientific fields, including psychology and medicine. This problem was termed "the replication crisis". Metascience has grown as a reaction to the replication crisis and to concerns about waste in research.[12]

Many prominent publishers are interested in meta-research and in improving the quality of their publications. Top journals such as Science, The Lancet, and Nature provide ongoing coverage of meta-research and problems with reproducibility.[13] In 2012, PLOS ONE launched a Reproducibility Initiative. In 2015, BioMed Central introduced a minimum-standards-of-reporting checklist to four titles.

The first international conference in the broad area of meta-research was the Research Waste/EQUATOR conference held in Edinburgh in 2015; the first international conference on peer review was the Peer Review Congress held in 1989.[14] In 2016, Research Integrity and Peer Review was launched. The journal's opening editorial called for "research that will increase our understanding and suggest potential solutions to issues related to peer review, study reporting, and research and publication ethics".[15]

Fields and topics of meta-research

Metascience can be categorized into five major areas of interest: Methods, Reporting, Reproducibility, Evaluation, and Incentives. These correspond, respectively, with how to perform, communicate, verify, evaluate, and reward research.[1]

Methods

Metascience seeks to identify poor research practices, including biases in research, poor study design, and abuse of statistics, and to find methods to reduce these practices.[1] Meta-research has identified numerous biases in scientific literature.[16] Of particular note is the widespread misuse of p-values and abuse of statistical significance.[17]

Scientific data science

Scientific data science is the use of data science to analyse research papers. It encompasses both qualitative and quantitative methods. Research in scientific data science includes fraud detection[18] and citation network analysis.[19]

Journalology

Journalology, also known as publication science, is the scholarly study of all aspects of the academic publishing process.[20][21] The field seeks to improve the quality of scholarly research by implementing evidence-based practices in academic publishing.[22] The term "journalology" was coined by Stephen Lock, the former editor-in-chief of The BMJ. The first Peer Review Congress, held in 1989 in Chicago, Illinois, is considered a pivotal moment in the founding of journalology as a distinct field.[22] The field of journalology has been influential in pushing for study pre-registration in science, particularly in clinical trials. Clinical-trial registration is now expected in most countries.[22]

Reporting

Meta-research has identified poor practices in reporting, explaining, disseminating and popularizing research, particularly within the social and health sciences. Poor reporting makes it difficult to accurately interpret the results of scientific studies, to replicate studies, and to identify biases and conflicts of interest in the authors. Solutions include the implementation of reporting standards, and greater transparency in scientific studies (including better requirements for disclosure of conflicts of interest). There is an attempt to standardize reporting of data and methodology through the creation of guidelines by reporting agencies such as CONSORT and the larger EQUATOR Network.[1]

Reproducibility

The replication crisis is an ongoing methodological crisis in which it has been found that many scientific studies are difficult or impossible to replicate.[23][24] While the crisis has its roots in the meta-research of the mid- to late 20th century, the phrase "replication crisis" was not coined until the early 2010s[25] as part of a growing awareness of the problem.[1] The replication crisis has been closely studied in psychology (especially social psychology) and medicine,[26][27] including cancer research.[28][29] Replication is an essential part of the scientific process, and the widespread failure of replication puts into question the reliability of affected fields.[30]

Moreover, replication of research (or failure to replicate) is considered less influential than original research, and is less likely to be published in many fields. This discourages the reporting of, and even attempts to replicate, studies.[31][32]

Evaluation and incentives

Metascience seeks to create a scientific foundation for peer review. Meta-research evaluates peer review systems including pre-publication peer review, post-publication peer review, and open peer review. It also seeks to develop better research funding criteria.[1]

Metascience seeks to promote better research through better incentive systems. This includes studying the accuracy, effectiveness, costs, and benefits of different approaches to ranking and evaluating research and those who perform it.[1] Critics argue that perverse incentives have created a publish-or-perish environment in academia which promotes the production of junk science, low quality research, and false positives.[33][34] According to Brian Nosek, "The problem that we face is that the incentive system is focused almost entirely on getting research published, rather than on getting research right."[35] Proponents of reform seek to structure the incentive system to favor higher-quality results.[36] For example, quality could be judged on the basis of narrative expert evaluations ("rather than indices"), institutional evaluation criteria, guarantees of transparency, and professional standards.[37]

Contributorship

Studies have proposed machine-readable standards and (a taxonomy of) badges for science publication management systems that home in on contributorship – who has contributed what and how much of the research labor – rather than on the traditional concept of plain authorship – who was involved in any way in the creation of a publication.[38][39][40][41] A study pointed out one of the problems associated with the ongoing neglect of nuance in contributions: it found that "the number of publications has ceased to be a good metric as a result of longer author lists, shorter papers, and surging publication numbers".[42]

Assessment factors

Factors other than a submission's merits can substantially influence peer reviewers' evaluations.[43] Some such factors may nevertheless be important, such as track records on the veracity of a researcher's prior publications and the alignment of that work with public interests. Still, evaluation systems, including those of peer review, may substantially lack mechanisms and criteria that are oriented, or oriented well, towards merit, real-world positive impact, progress and public usefulness rather than towards analytical indicators such as number of citations or altmetrics, even when such indicators can serve as partial measures of those ends.[44][45] Rethinking of the academic reward structure "to offer more formal recognition for intermediate products, such as data" could have positive impacts and reduce data withholding.[46]

Recognition of training

A commentary noted that academic rankings do not consider where (country and institute) the respective researchers were trained.[47]

Scientometrics

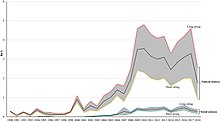

Scientometrics concerns itself with measuring bibliographic data in scientific publications. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts.[48] Studies suggest that "metrics used to measure academic success, such as the number of publications, citation number, and impact factor, have not changed for decades" and have to some degree "ceased" to be good measures,[42] leading to issues such as "overproduction, unnecessary fragmentations, overselling, predatory journals (pay and publish), clever plagiarism, and deliberate obfuscation of scientific results so as to sell and oversell".[49]

Novel tools in this area include systems to quantify how much the cited node informs the citing node.[50] This can be used to convert an unweighted citation network into a weighted one and then to assess importance, deriving "impact metrics for the various entities involved, like the publications, authors etc"[51] as well as, among other uses, to support search engines and recommendation systems.
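The idea of weighting a citation network and then ranking its nodes can be illustrated with a minimal sketch. It is not the method of the cited studies: the edge weights below are made-up stand-ins for "how much the cited work informs the citing work", the paper names are hypothetical, and a hand-written weighted PageRank is used here only as one familiar way to turn a weighted graph into an importance score.

```python
from collections import defaultdict


def weighted_pagerank(edges, damping=0.85, iterations=100):
    """Rank nodes of a weighted citation graph.

    edges maps (citing, cited) -> positive weight. The weights are an
    assumption of this sketch, not values produced by any real tool.
    """
    nodes = sorted({node for pair in edges for node in pair})
    n = len(nodes)

    # Total outgoing weight per citing paper, used to normalize its votes.
    out_weight = defaultdict(float)
    for (citing, _cited), weight in edges.items():
        out_weight[citing] += weight

    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Papers that cite nothing spread their score evenly over all nodes.
        dangling = sum(rank[node] for node in nodes if out_weight[node] == 0)
        base = (1 - damping) / n + damping * dangling / n
        new_rank = {node: base for node in nodes}
        for (citing, cited), weight in edges.items():
            share = weight / out_weight[citing]
            new_rank[cited] += damping * rank[citing] * share
        rank = new_rank
    return rank


# Hypothetical network: C and D both cite A and B, but C draws mostly on A.
weighted = {
    ("paper_C", "paper_A"): 0.9,
    ("paper_C", "paper_B"): 0.1,
    ("paper_D", "paper_A"): 0.5,
    ("paper_D", "paper_B"): 0.5,
}
# The same network with every citation counted equally.
unweighted = {edge: 1.0 for edge in weighted}

weighted_scores = weighted_pagerank(weighted)
unweighted_scores = weighted_pagerank(unweighted)
```

In the unweighted version, paper_A and paper_B are indistinguishable because each receives two citations; once the citations carry weights, paper_A ranks higher. Aggregating such per-paper scores by author or journal would be one possible way to derive metrics for other entities, again as an assumption rather than a description of existing tools.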

Science governance

Science funding and science governance can also be explored and informed by metascience.[52]

Incentives

Various interventions such as prioritization can be important. For instance, the concept of differential technological development refers to deliberately developing technologies – e.g. control-, safety- and policy-technologies versus risky biotechnologies – at different precautionary paces to decrease risks, mainly global catastrophic risk, by influencing the sequence in which technologies are developed.[53][54] Relying only on the established form of legislation and incentives to ensure the right outcomes may not be adequate as these may often be too slow[55] or inappropriate.

Other incentives to govern science and related processes, including via metascience-based reforms, may include ensuring accountability to the public (e.g. in terms of the accessibility of research, especially publicly funded research, or of research seriously addressing topics of public interest), increasing the qualified productive scientific workforce, improving the efficiency of science to improve problem-solving in general, and ensuring that unambiguous societal needs based on solid scientific evidence – such as evidence about human physiology – are adequately prioritized and addressed. Such interventions, incentives and intervention designs can be subjects of metascience.

Science funding and awards

Scientific awards are one category of science incentives. Metascience can explore existing and hypothetical systems of science awards. For instance, a study found that work honored by Nobel Prizes clusters in only a few scientific fields: of the 114 (DC2) or 849 (DC3) domains into which science could be divided under the study's classification systems, only 36 and 71, respectively, had received at least one Nobel Prize. Five of the 114 domains were shown to make up over half of the Nobel Prizes awarded 1995–2017 (particle physics, cell biology, atomic physics, neuroscience, molecular chemistry).[57][58]

A study found that the delegation of responsibility by policy-makers – a centralized, authority-based, top-down approach – for knowledge production and appropriate funding to science, with science subsequently somehow delivering "reliable and useful knowledge to society", is too simple.[52]

Measurements show that the allocation of biomedical resources can be more strongly correlated with previous allocations and research than with the burden of diseases.[59]

A study suggests that "[i]f peer review is maintained as the primary mechanism of arbitration in the competitive selection of research reports and funding, then the scientific community needs to make sure it is not arbitrary".[43]

Studies indicate that there is a need to "reconsider how we measure success".[42]

Funding data

Funding information from grant databases and funding acknowledgment sections can be a source of data for scientometric studies, e.g. for investigating or recognizing the impact of funding entities on the development of science and technology.[60]

Research questions and coordination

Risk governance

Science communication and public use

It has been argued that "science has two fundamental attributes that underpin its value as a global public good: that knowledge claims and the evidence on which they are based are made openly available to scrutiny, and that the results of scientific research are communicated promptly and efficiently".[61] Metascientific research is exploring topics of science communication such as media coverage of science, science journalism and online communication of results by science educators and scientists.[62][63][64][65] A study found that the "main incentive academics are offered for using social media is amplification" and that it should be "moving towards an institutional culture that focuses more on how these or such platforms can facilitate real engagement with research".[66] Science communication may also involve the communication of societal needs, concerns and requests to scientists.

Alternative metrics tools

Alternative metrics tools can be used not only to help with assessment (performance and impact)[59] and findability, but also to aggregate many of the public discussions about a scientific paper in social media such as Reddit, citations on Wikipedia, and reports about the study in the news media, which can in turn be analyzed in metascience or provided to and used by related tools.[67] In terms of assessment and findability, altmetrics rate publications' performance or impact by the interactions they receive through social media or other online platforms,[68] which can, for example, be used to sort recent studies by measured impact, including before other studies cite them. The specific procedures of established altmetrics are not transparent,[68] and the algorithms used cannot be customized or altered by the user as open-source software can. A study has described various limitations of altmetrics and points "toward avenues for continued research and development".[69] Altmetrics are also limited in their use as a primary tool for researchers to find constructive feedback they have received.

Societal implications and applications

It has been suggested that it may benefit science if "intellectual exchange—particularly regarding the societal implications and applications of science and technology—are better appreciated and incentivized in the future".[59]

Knowledge integration

Primary studies "without context, comparison or summary are ultimately of limited value" and various types of research syntheses and summaries integrate primary studies.[70] Progress in key social-ecological challenges of the global environmental agenda is "hampered by a lack of integration and synthesis of existing scientific evidence", with a "fast-increasing volume of data", compartmentalized information and generally unmet evidence synthesis challenges.[71] According to Khalil, researchers are facing the problem of too many papers – e.g. in March 2014 more than 8,000 papers were submitted to arXiv – and to "keep up with the huge amount of literature, researchers use reference manager software, they make summaries and notes, and they rely on review papers to provide an overview of a particular topic". He notes that review papers are usually only "for topics in which many papers were written already, and they can get outdated quickly" and suggests "wiki-review papers" that are continuously updated with new studies on a topic, summarize many studies' results and suggest future research.[72] A study suggests that if a scientific publication is cited in a Wikipedia article, this could potentially be considered an indicator of some form of impact for the publication,[68] for example because it may, over time, indicate that the reference has contributed to a high-level summary of the given topic.

Science journalism

Science journalists play an important role in the scientific ecosystem and in science communication to the public and need to "know how to use, relevant information when deciding whether to trust a research finding, and whether and how to report on it", vetting the findings that get transmitted to the public.[73]

Science education

Source: https://en.wikipedia.org?pojem=Metascience_(research). Text is available under the Creative Commons Attribution/Share-Alike License 3.0 Unported; additional terms may apply. See the Terms of Use page for details.
